Introducing Worker Threads

HarperDB 4.1 introduces the ability to use worker threads for concurrently handling HTTP requests. Previously this was handled by processes.

This shift provides important benefits:

- better control of traffic delegation with support for optimized load tracking and session affinity

- better debuggability

- support for alternate storage models

In this post, we want to explain this approach, not only to understand how HarperDB works, but also because this technique is broadly applicable in JavaScript HTTP servers.

Understanding What We’re Up Against

Modern CPUs feature many cores. Fully leveraging the capabilities of the CPUs inherently requires high levels of parallelism. While Node’s asynchronous I/O functionality is capable of offloading some load to separate threads, this usually does not significantly increase parallelism in core usage and is generally insufficient for saturating even two cores, much less the many cores of high end machines.

Typically in NodeJS, the most common path for scaling HTTP/web servers to achieve greater parallelism is through a cluster of processes (using the cluster module). The cluster module conveniently starts multiple processes with a shared port, which allows the operating system to then delegate incoming connections (requests) to each of the available processes. This is easy to set up and it works reasonably well. However, because the operating system controls the delegation, there is no ability to control and tune this delegation for better behavior and performance.

Processes vs Threads

Comparisons come with pros and cons on both sides.

A benefit to using processes is that processes can crash without bringing down other processes. Although, to counter, it is possible for a threaded-model to have a manager process that resets the main process if it crashes. And in HarperDB, crashes have proven to be a very rare and exceptional situation.

A downfall for processes and a win for threads is in resources. Comparatively, threads require less resources than processes. Every aspect of the Node.js runtime must be duplicated in every extra process. While worker threads are able to reuse important parts of the runtime including the JS runtime core functions, lmdb-js’s core instance, and perhaps most importantly, the worker pool for async tasks. Speaking of the worker pool, if you start an HDB server with 20 processes, each process generally has a pool of about a dozen threads each. This means that there are hundreds of threads in total, and these threads have pretty low utilization. On the other hand, with worker threads, the worker pool for async tasks is shared, and in a process with 20 worker threads, this just adds the central pool of 12 threads for async tasks (which can be increased if needed). This provides a much more efficient use of memory and threads.

Don’t Just Take Our Word for It

In fact, NodeJS’s own cluster document states that worker threads are actually more efficient. What the documentation does not state is how to actually use workers for sharing the workload from a single listening port. This requires a bit of OS-specific trickery and internal access.

OS-Specific Trickery and Internal Access

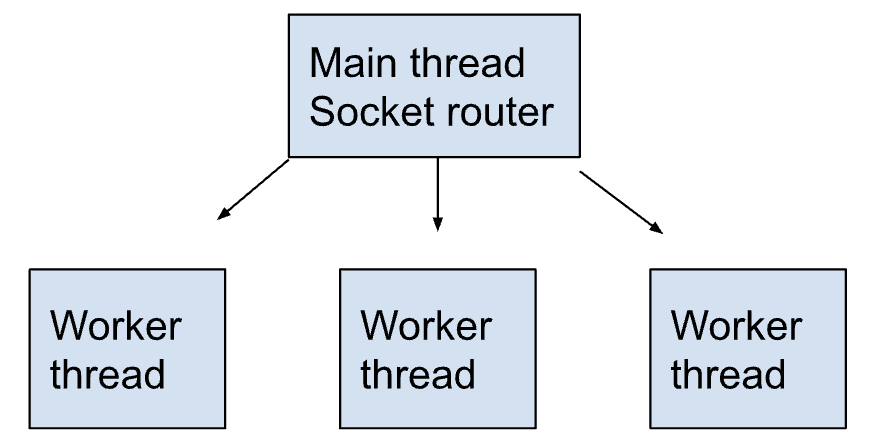

At a high level, this is done by setting up the main thread (a single thread) to be the main listener to a network port. As connections are received, the main thread can then delegate the connections to a set of worker threads to actually parse the HTTP requests and respond to them.

This requires very little overhead from the main thread, and can be done very efficiently on unix operating systems. Sockets all have a corresponding file descriptor (a number), and a handle that is used to refer to them. When incoming socket connections are made on the main thread, we can get the file descriptor number, decide which worker thread to send the connection to and send that file descriptor to the worker thread. The worker thread can then use the file descriptor to instantiate a new Socket instance. The Socket instance can then be delivered to an HTTP server instance in the worker threads to commence with the full HTTP parsing and processing (where all the real work happens).

Setting up Main Thread with Socket Routing

First, let’s look at how we set up a listening port in the main thread. An important note is that it is valuable to start the socket paused. This ensures that the main thread does not consume any data from the socket, and the entire process of reading from the socket can be handled by the worker threads (it is possible to read from the socket and then delegate, but this is more complicated, and requires passing the read data to the worker thread). We also allow half-open sockets since this is permitted in HTTP:

Here we are setting up a socket listener that listens on port 80, with the appropriate configuration. Now we have a listener, and here is where we implement the appropriate logic to decide which worker to delegate to. In HarperDB we have a couple strategies available. One strategy is to monitor workers by periodically checking the idle time using worker.performance.eventLoopUtilization().idle. Then we allocate requests to workers based on using a ratio derived from the expected availability from the current idle times.

Another strategy–HarperDB also supports affinity based routing using remote IP addresses. With this option, once a connection is received from a certain remote IP address, it is recorded and all subsequent connections from that IP address will be delegated to the same worker.

This type of session/address affinity has numerous advantages:

- Better caching locality for operations within workers

- Fairer delegation (one device/user doesn’t overload multiple threads)

- Better behavior for in-memory sessions (one thread can keep data in memory for continued requests from a user from one ip address). If a request comes in from a new/unseen IP address, it falls back to the idle strategy.

The full details of these strategies is beyond the scope of this post, but here we will simply say that our workerStrategy function chooses the best worker to delegate a connection to. We then access the file descriptor from the connection to send to the worker. This requires a little bit of hacking into private (underscore prefixed) properties of NodeJS. Caveat emptor! (Of all the things that might break in future Node versions, this doesn’t worry us much though). Here is a basic version of our socket listener:

Worker Thread Receiving Socket

The worker threads now need to be set up to receive these inter-thread messages and deliver the sockets to their HTTP server instance. Each thread will start its own HTTP server instance. Let’s setup an HTTP server, the basic template is something like this:

Many of us may not be actually using the direct NodeJS HTTP interface, but may be using a web/HTTP framework. We use Fastify in HarperDB, for example, but Koa and Express are popular too. Once you have set up a Fastify server, there is a `server` property that provides you access to the underlying NodeJS HTTP server instance. Note: we do not need to listen to any socket here; we will actively deliver sockets so no listening is necessary. In fact, trying to listen would cause a problem since multiple threads can’t listen to the same port. If your framework does require specifying a port, you can use port 0, which does not allocate a static port listener.

Once we have a reference to this server instance, we can proceed with delegating connections to it. We listen for messages from the main parent thread, and make sure the message is a socket message with a file descriptor (we have other messages that can occasionally be sent between threads, so we want to ignore those). We then construct a Socket instance and then deliver that to the HTTP server by manually triggering the “connection” event:

Once you have done this, the HTTP server in the worker thread will start reading the data from the socket, parsing, and delegating the requests to your application (and through your framework if you are using one). Likewise the responses will be delivered through the socket since the worker thread and HTTP server now have full control of the socket.

Sockets in HTTP may also employ keep-alives where a single socket can be used for multiple requests (even multiple in-flight if pipelining is used). Since the socket has been delivered to the HTTP server, this is all handled seamlessly. Likewise the HTTP server can also handle upgrading to HTTPS, HTTP/2, or even WebSockets. All it needs is just the sockets!

The Windows Caveat

As mentioned before, a lot of this involves operating system specific trickery. And fortunately file descriptor based socket routing works well across unix operating systems and MacOS. But, it does not work on Windows. Unfortunately, there seems to be no clean efficient way to do this type of socket routing on Windows. As a fallback, we proxy sockets to worker threads by reading every byte of the incoming socket and sending messages with every chunk of data to the worker thread and then likewise the worker thread must message each chunk of data for the response back to the main thread to deliver the response. While this is not very efficient, it does provide a working fallback for Windows. HarperDB recommends that Windows only be used for development purposes, so this fallback is an adequate solution.

More Advanced Routing

Reading from Sockets Before Routing

As mentioned before, in this implementation we are careful to start sockets paused and not read any data in the main thread. This makes it easy to just pass the socket directly into the HTTP server. For more advanced routing, there may be a desire to actually read from the socket. For example with true session affinity based on a session identifier from a cookie, it is necessary to read data from the socket to read the cookie. Since this data is consumed, it would then be necessary to send this consumed data to the worker thread, and manually create a synthetic event on the socket in the worker thread to emulate re-reading this data for the worker’s HTTP server instance. This also takes a little bit of low level magic. In order to read header data from a socket and ensure that no further data is read from the socket before delegating to a child thread, you need to call socket._handle.readStop():

And in the child thread, after emitting the socket:

And from here you parse the headers. Which is where we leave you, as that takes this piece out of scope. Please note that this is assuming HTTP (no SSL); this is significantly more complicated with HTTPS/SSL.

Summary

HarperDB has switched to threads beginning with v4.1 with good reasons. Thread-based HTTP concurrency has provided significantly more control over routing for HarperDB, better memory/resource utilization, along with improved debugging that is afforded by using native worker threads. We hope this post can help you implement worker threads for HTTP processing in your project as well!

.png)

.png)